Stable and efficient resource management using deep neural network on cloud computing

Dec. 2022. By Young-Sik Jeong

Keywords: Cloud computing, Resource management, Hybrid pod autoscaling, Resource usage forecasting, Attention-based Bi-LSTM

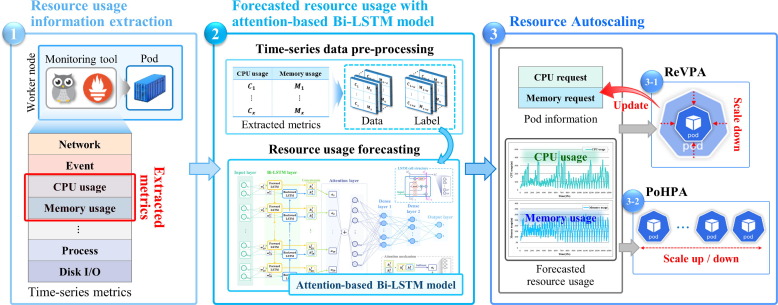

Autoscaling for resource management in a cloud computing service guarantees the high availability and extensibility of applications and services. Horizontal pod autoscaling (HPA) does not affect running tasks, but it has the disadvantage that it cannot scale immediately. Furthermore, because it is difficult to identify the amount of resources that applications and services require, excess resources that have already been allocated cannot be scaled down and are therefore wasted. This study therefore proposes Proactive Hybrid Pod Autoscaling (ProHPA), which responds immediately to irregular workloads and reduces resource overallocation. ProHPA uses a bidirectional long short-term memory (Bi-LSTM) model with an attention mechanism to forecast future CPU and memory usage, whose patterns may be similar or different. Reducing excessive resource usage with vertical pod autoscaling (ReVPA) adjusts overallocated resources within a pod according to the forecasted resource usage. Lastly, prevention overload with HPA (PoHPA) performs resource scaling immediately by using the forecasted resource usage and pod information. In the performance evaluation, ProHPA improved average CPU and memory utilization by 23.39% and 42.52%, respectively, compared with conventional HPA when initial resources were overallocated. In addition, ProHPA did not exhibit overload when resources were insufficiently allocated, in contrast to conventional HPA.
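To make the hybrid idea concrete, the following is a minimal sketch and not the algorithm from the paper. It assumes that a forecasted utilization value is substituted for the current metric in the standard Kubernetes HPA replica formula, and that a pod's resource request is resized to the forecasted peak plus a safety margin. The function names, the `headroom_pct` parameter, and the replica bounds are hypothetical and chosen only for illustration.

```python
import math


def proactive_replicas(current_replicas: int,
                       forecast_util_pct: float,
                       target_util_pct: float,
                       min_replicas: int = 1,
                       max_replicas: int = 10) -> int:
    """Horizontal step (assumption): apply the standard HPA formula
    ceil(currentReplicas * metric / target) to a *forecasted* metric,
    so that scaling can happen before the load arrives."""
    if target_util_pct <= 0:
        raise ValueError("target utilization must be positive")
    raw = math.ceil(current_replicas * forecast_util_pct / target_util_pct)
    # Clamp to the configured replica bounds, as HPA does.
    return max(min_replicas, min(max_replicas, raw))


def rightsized_request(forecast_peak: float, headroom_pct: float = 20) -> int:
    """Vertical step (assumption): shrink or grow a pod's resource request
    to the forecasted peak usage plus a hypothetical safety margin."""
    if forecast_peak < 0:
        raise ValueError("forecasted usage cannot be negative")
    return math.ceil(forecast_peak * (100 + headroom_pct) / 100)


# Example: 4 pods, 90% forecasted CPU utilization, 60% target.
replicas = proactive_replicas(4, 90, 60)
# Example: a pod forecasted to peak at 500 millicores.
cpu_request_m = rightsized_request(500)
```

In this sketch the two steps are independent: the vertical step trims overallocated requests, while the horizontal step adds replicas ahead of a forecasted spike. How ProHPA actually coordinates ReVPA and PoHPA is not specified in the abstract.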